This detailed step-by-step guide shows you how to install Hadoop v3.3.0 on Windows 10. It uses the winutils tool built for Hadoop 3.3.0; WSL (Windows Subsystem for Linux) is not required. Hadoop 3.3.0 was released on July 14, 2020 and is the first release of the Apache Hadoop 3.3 line. It brings significant changes compared with Hadoop 3.2.0, such as Java 11 runtime support, a protobuf upgrade to 3.7.1, scheduling of opportunistic containers, and non-volatile SCM support in HDFS cache directives.

Please follow all the instructions carefully. Once you complete the steps, you will have a shiny pseudo-distributed, single-node Hadoop installation to work with.

The yellow elephant logo is a registered trademark of Apache Hadoop; the blue window logo is a registered trademark of Microsoft.

References

Refer to the following articles if you prefer to install other versions of Hadoop, configure a multi-node cluster, or use WSL.

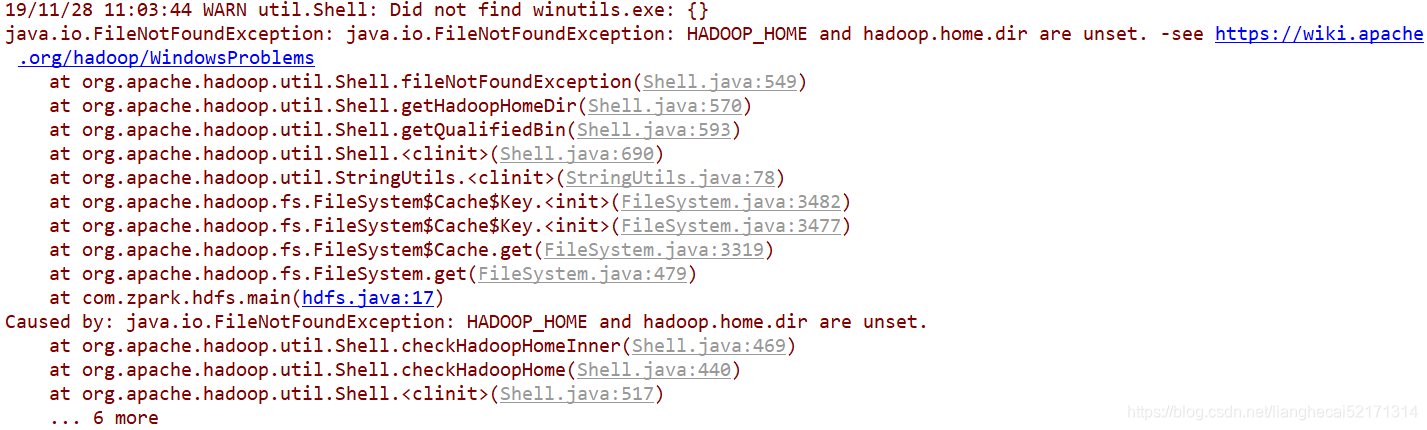

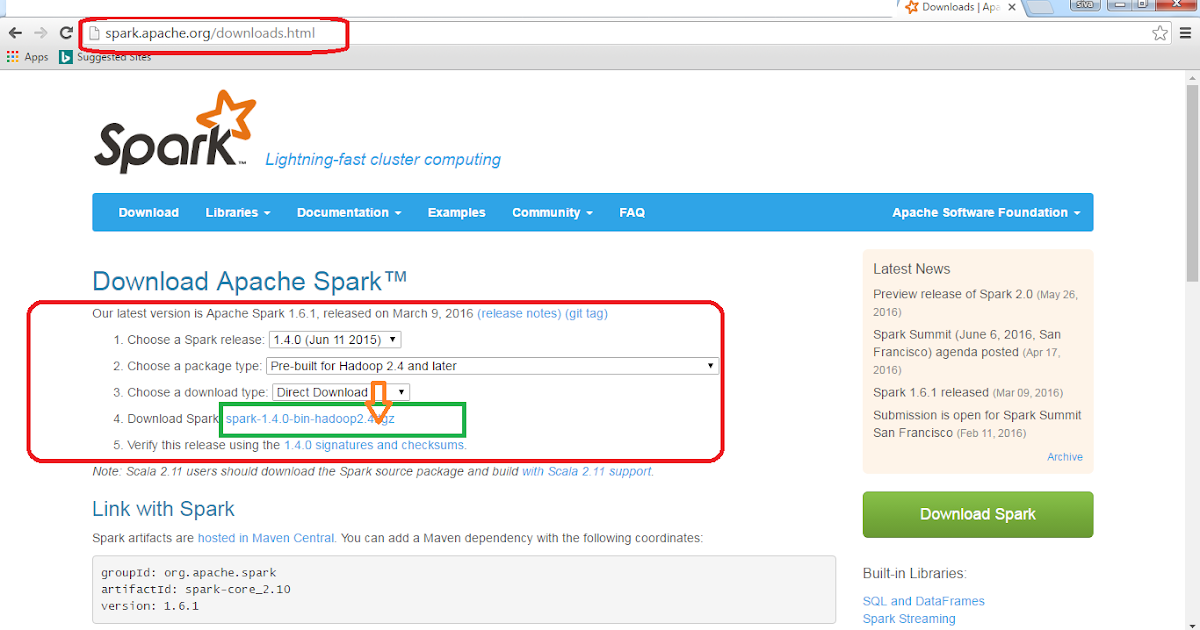

- Install Hadoop 3.3.0 on Windows 10 using WSL (Windows Subsystem for Linux is required)

Required tools

Before you start, make sure you have the following tools available in Windows 10.

| Tool | Comments |
| --- | --- |
| PowerShell | We will use this tool to download package. In my system, PowerShell version table is listed below: |
| Git Bash or 7 Zip | We will use Git Bash or 7 Zip to unzip Hadoop binary package. You can choose to install either tool or any other tool as long as it can unzip *.tar.gz files on Windows. |
| Command Prompt | We will use it to start Hadoop daemons and run some commands as part of the installation process. |
| Java JDK | JDK is required to run Hadoop as the framework is built using Java. In my system, my JDK version is jdk1.8.0_161. Check out the supported JDK version on the following page. From Hadoop 3.3.0, Java 11 runtime is now supported. |

Now we will start the installation process.

Step 1 - Download Hadoop binary package

Select download mirror link

Go to the download page of the official website:

Then choose one of the mirror links. The page lists the mirrors closest to you based on your location. I chose the following mirror link:

Download the package

Open PowerShell and run the following commands one by one:

It may take a few minutes to download.

Once the download completes, you can verify it:

You can also directly download the package through your web browser and save it to the destination directory.
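Beyond checking that the file exists, one way to verify a download is to compare its SHA-512 digest with the checksum published alongside the release. The following is a minimal Python sketch and not part of the original steps; the file name `hadoop-3.3.0.tar.gz` and the helper name `sha512_of` are assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def sha512_of(path, chunk_size=1024 * 1024):
    """Return the hex SHA-512 digest of a file, reading it in chunks."""
    digest = hashlib.sha512()
    with open(path, "rb") as handle:
        # Read in chunks so a large package does not have to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    # Hypothetical file name; adjust to wherever you saved the package.
    package = Path("hadoop-3.3.0.tar.gz")
    if package.exists():
        print(package.stat().st_size, "bytes")
        print(sha512_of(package))
    else:
        print("Package not found:", package)
```

Compare the printed digest with the published checksum by eye or with a string comparison; a mismatch suggests an incomplete or corrupted download.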

Step 2 - Unpack the package

Now we need to unpack the downloaded package using a GUI tool (such as 7 Zip) or the command line. I will use Git Bash to unpack it.

Open Git Bash and change the directory to the destination folder:

And then run the following command to unzip:

The command can take quite a few minutes because the package contains a large number of files.

After the unzip command is completed, a new folder hadoop-3.3.0 is created under the destination folder.
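If neither Git Bash nor 7 Zip is at hand, the same unpacking step can be sketched with Python's standard `tarfile` module. This is an illustrative alternative rather than the method used in this guide; the archive name and the helper `unpack_tarball` are assumptions, and how links inside the archive behave on Windows is not covered here.

```python
import tarfile
from pathlib import Path


def unpack_tarball(archive, destination):
    """Extract a .tar.gz archive and return its top-level entry names."""
    destination = Path(destination)
    destination.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Collect the first path component of every member, e.g. "hadoop-3.3.0".
        top_level = sorted({m.name.split("/")[0] for m in tar.getmembers()})
        # Only extract archives obtained from a source you trust.
        tar.extractall(destination)
    return top_level


if __name__ == "__main__":
    # Hypothetical archive location; adjust to your destination folder.
    archive = Path("hadoop-3.3.0.tar.gz")
    if archive.exists():
        print(unpack_tarball(archive, "."))
    else:
        print("Archive not found:", archive)
```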

Please ignore it for now.

Step 3 - Install Hadoop native IO binary

Hadoop on Linux includes optional Native IO support. On Windows, however, Native IO is mandatory; without it you will not be able to get your installation working. The Windows native IO libraries are not included in the Apache Hadoop release, so we need to build and install them.

https://github.com/kontext-tech/winutils

Download all the files from the location linked above and save them to the bin folder under the Hadoop folder. In my environment, the full path is: F:\big-data\hadoop-3.3.0\bin. Remember to change it to your own path.

Alternatively, you can run the following commands in the previous PowerShell window to download:

After this, the bin folder looks like the following:

Step 4 - (Optional) Java JDK installation

Java JDK is required to run Hadoop. If you have not installed Java JDK, please install it.

You can install JDK 8 from the following page:

Once you complete the installation, please run the following command in PowerShell or Git Bash to verify:

If you get an error such as 'cannot find java command or executable', don't worry; we will resolve it in the next step.

Step 5 - Configure environment variables

Now that we've downloaded and unpacked all the artefacts, we need to configure two important environment variables.

Configure JAVA_HOME environment variable

As mentioned earlier, Hadoop requires Java, so we need to configure the JAVA_HOME environment variable (it is not mandatory, but I recommend it).

First, we need to find the location of the JDK. On my system, the path is: D:\Java\jdk1.8.0_161.

Your location may differ depending on where you installed your JDK.

And then run the following command in the previous PowerShell window:

Remember to quote the path, especially if your JDK path contains spaces.

The output looks like the following:

Configure HADOOP_HOME environment variable

Similarly, we need to create a new environment variable for HADOOP_HOME using the following command. The path should be your extracted Hadoop folder. In my environment it is: F:\big-data\hadoop-3.3.0.

If you used PowerShell to download and if the window is still open, you can simply run the following command:

The output looks like the following screenshot:

Alternatively, you can specify the full path:

Now you can also verify the two environment variables in the system:
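As a complementary check, the short Python sketch below inspects both variables and also looks for winutils.exe under the Hadoop bin folder. It is only an illustration: the helper name `check_hadoop_env` is hypothetical, and the script should be run from a newly opened terminal so that it sees the updated variables.

```python
import os
from pathlib import Path


def check_hadoop_env(env):
    """Return a list of problems found with JAVA_HOME and HADOOP_HOME."""
    problems = []
    for name in ("JAVA_HOME", "HADOOP_HOME"):
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not Path(value).is_dir():
            problems.append(f"{name} points to a missing folder: {value}")
    hadoop_home = env.get("HADOOP_HOME")
    if hadoop_home and Path(hadoop_home).is_dir():
        # The native IO binaries from Step 3 are expected in the bin folder.
        if not (Path(hadoop_home) / "bin" / "winutils.exe").is_file():
            problems.append("winutils.exe not found under HADOOP_HOME/bin")
    return problems


if __name__ == "__main__":
    found = check_hadoop_env(os.environ)
    for line in found or ["JAVA_HOME and HADOOP_HOME look fine"]:
        print(line)
```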

Configure PATH environment variable

Once we finish setting up the above two environment variables, we need to add the bin folders to the PATH environment variable.

If the PATH environment variable already exists in your system, you can also manually add the following two paths to it:

- %JAVA_HOME%/bin

- %HADOOP_HOME%/bin

Alternatively, you can run the following command to add them:

If you don't have other user variables set up in the system, you can also directly add a Path environment variable that references the others to keep it short:

Close the PowerShell window, open a new one, and type winutils.exe directly to verify that the steps above completed successfully:

You should also be able to run the following command:

Step 6 - Configure Hadoop

Now we are ready to configure the most important part: the Hadoop configuration, which involves the Core, YARN, MapReduce and HDFS settings.

Configure core site

Edit the file core-site.xml in the %HADOOP_HOME%\etc\hadoop folder. In my environment, the actual path is F:\big-data\hadoop-3.3.0\etc\hadoop.

Replace the configuration element with the following:
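To illustrate the shape of a Hadoop site file, here is a minimal Python sketch that renders a `<configuration>` element from a dictionary of properties. `fs.defaultFS` is the Hadoop property for the default file system URI; the host and port shown are placeholders chosen for this example, not the values from this guide, and `render_site_xml` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def render_site_xml(properties):
    """Render a Hadoop *-site.xml <configuration> element from a dict."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(root)  # pretty-print; requires Python 3.9 or later
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Placeholder URI: replace the host and port with your own settings.
    print(render_site_xml({"fs.defaultFS": "hdfs://localhost:9000"}))
```

The same helper can render the other site files by passing a different dictionary, which keeps the property names and values in one place.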

Configure HDFS

Edit the file hdfs-site.xml in the %HADOOP_HOME%\etc\hadoop folder.

Before editing, please create two folders in your system: one for the namenode directory and another for the data directory. On my system, I created the following two subfolders:

- F:\big-data\data\dfs\namespace_logs_330

- F:\big-data\data\dfs\data_330

Replace the configuration element with the following (remember to replace the highlighted paths accordingly):

In Hadoop 3, the property names are slightly different from previous versions. Refer to the following official documentation to learn more about the configuration properties:
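As a sketch of how the two folders map onto HDFS settings, the Python snippet below builds a property dictionary using the Hadoop 3 names `dfs.replication`, `dfs.namenode.name.dir` and `dfs.datanode.data.dir`. The replication factor of 1 (a common choice for a single node) and the `file:///` URI form of the paths are assumptions for illustration, as are the helper names.

```python
from urllib.parse import quote


def windows_path_to_file_uri(path):
    """Convert an absolute Windows path into a file:/// URI."""
    # Backslashes become forward slashes; spaces and similar are escaped.
    return "file:///" + quote(path.replace("\\", "/"), safe="/:")


def hdfs_site_properties(namenode_dir, datanode_dir, replication=1):
    """Build hdfs-site.xml properties for a single-node setup (assumed values)."""
    return {
        "dfs.replication": str(replication),
        "dfs.namenode.name.dir": windows_path_to_file_uri(namenode_dir),
        "dfs.datanode.data.dir": windows_path_to_file_uri(datanode_dir),
    }


if __name__ == "__main__":
    props = hdfs_site_properties(
        r"F:\big-data\data\dfs\namespace_logs_330",
        r"F:\big-data\data\dfs\data_330",
    )
    for name, value in props.items():
        print(name, "=", value)
```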

Configure MapReduce and YARN site

Edit the file mapred-site.xml in the %HADOOP_HOME%\etc\hadoop folder.

Replace the configuration element with the following:

Edit the file yarn-site.xml in the %HADOOP_HOME%\etc\hadoop folder.

Step 7 - Initialise HDFS & bug fix

Run the following command in Command Prompt:

The following is an example of the output when HDFS is formatted successfully:

Step 8 - Start HDFS daemons

Run the following command to start HDFS daemons in Command Prompt:

Verify HDFS web portal UI through this link: http://localhost:9870/dfshealth.html#tab-overview.

You can also navigate to a data node UI:
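If you would rather check the NameNode web UI from a script than from a browser, a small Python sketch such as the following can probe the address given above. The helper name `is_http_up` and the timeout value are assumptions for illustration.

```python
import urllib.error
import urllib.request


def is_http_up(url, timeout=3.0):
    """Return True if the URL answers an HTTP GET with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


if __name__ == "__main__":
    # NameNode UI address used in this guide.
    print(is_http_up("http://localhost:9870/dfshealth.html"))
```

A result of False shortly after starting the daemons may only mean that the NameNode is still starting up, so it can be worth retrying after a few seconds.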

Step 9 - Start YARN daemons

This guide starts the YARN daemons from an elevated Command Prompt. If you prefer not to use Administrator permission, the following comment describes an approach that uses a local Windows account instead:

https://kontext.tech/column/hadoop/377/latest-hadoop-321-installation-on-windows-10-step-by-step-guide#comment314

Run the following command in an elevated Command Prompt window (Run as administrator) to start YARN daemons:

Once all services have started successfully, you can verify the YARN ResourceManager UI.

Step 10 - Verify Java processes

Run the following command to verify all running processes:

The output looks like the following screenshot:

* We can see the process ID of each Java process for HDFS/YARN.
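The check can also be scripted. The sketch below assumes the process list comes from the JDK's `jps` tool, which prints one `<pid> <name>` pair per line, and that the four daemons of interest are NameNode, DataNode, ResourceManager and NodeManager; the helper `missing_daemons` is hypothetical.

```python
import shutil
import subprocess

EXPECTED_DAEMONS = ("NameNode", "DataNode", "ResourceManager", "NodeManager")


def missing_daemons(jps_output, expected=EXPECTED_DAEMONS):
    """Return the expected daemon names that do not appear in jps output."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        # Each useful line looks like "<pid> <process name>".
        if len(parts) >= 2 and parts[0].isdigit():
            running.add(parts[1])
    return [name for name in expected if name not in running]


if __name__ == "__main__":
    if shutil.which("jps"):
        result = subprocess.run(["jps"], capture_output=True, text=True)
        print("Missing daemons:", missing_daemons(result.stdout) or "none")
    else:
        print("jps not found on PATH; check JAVA_HOME and PATH")
```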

Step 11 - Shutdown YARN & HDFS daemons

You don't need to keep the services running all the time. Once you finish testing, you can stop them by running the following commands one by one:

Let me know if you encounter any issues. Enjoy your new Hadoop installation on Windows 10.
